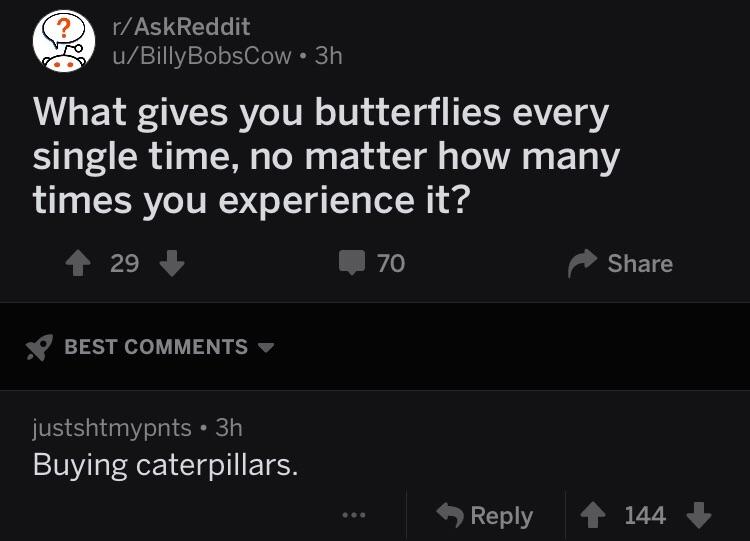

#closeenough

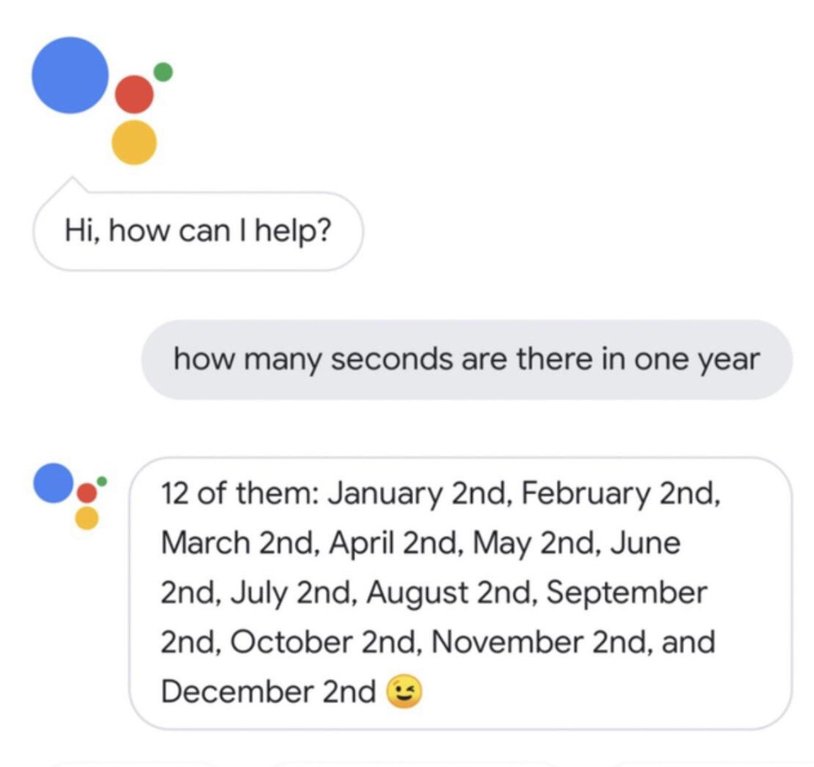

Rule #7 of artificial intelligence: close enough.

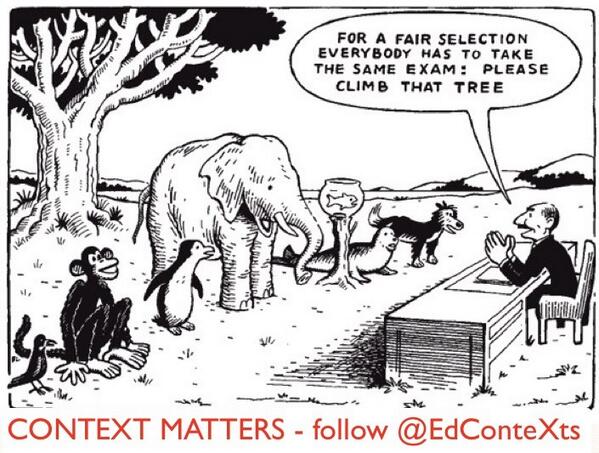

Number 7 is pretty far down the list, but “close enough” is no less important a concept for AI. Have you ever queried Google or Bing and gotten back a single entry, in other words, “the answer” to your question? No. I know I’ve gotten only a single page of results (I ask some weird questions), but even then it provides a menu of options.

Google’s page-ranking algorithms are legendary and as closely guarded as military secrets. They also aren’t carved in stone: the algorithms are adjusted to counter specific hacks and to smooth out trends.

Artificial intelligence doesn’t provide solutions the way an algebra problem does, with one exact answer. It’s stoic in its reply, showing no emotion and yet offering a voluminous suite of possibilities for the inquirer to consider. Indifferent to the vicissitudes of fortune, the AI sweeps the oceans of the internet to give you what is . . . close enough.
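To make “close enough” concrete, here is a minimal sketch, assuming a toy setup: it is not how Google or Bing actually rank pages. It scores a few hypothetical documents against a query by word overlap (Jaccard similarity, a swapped-in scoring method chosen only for simplicity) and returns the top few, ranked, rather than a single answer. The `jaccard` and `rank` functions and the sample documents are illustrative assumptions.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Fraction of words two texts share (0.0 to 1.0); a toy similarity score."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def rank(query: str, docs: list[str], k: int = 3) -> list[tuple[float, str]]:
    """Return up to k (score, document) pairs closest to the query, best first."""
    q = set(query.lower().split())
    scored = [(jaccard(q, set(d.lower().split())), d) for d in docs]
    # Highest score first; Python's sort is stable, so ties keep their input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hypothetical corpus, for illustration only.
docs = [
    "how to bake sourdough bread",
    "sourdough starter troubleshooting",
    "history of bread",
    "best hiking trails",
]

for score, doc in rank("bake sourdough bread at home", docs):
    print(f"{score:.2f}  {doc}")
```

No document matches the query exactly. The sketch prints a short ranked menu (0.43, 0.14, 0.14), and it is still up to the reader to decide which result is “the answer.”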

Humans build those algorithms, and only you decide what is “the answer.”

#machinelearning #aibots #algorithms #aibot #deeplearning #ml